Font Metrics & DAT File Format

By Geep 2024

Overview

TDM provides a number of fonts (Fonts_Screenshots) that can be used, in conjunction with GUI code, on in-game surfaces, for HUD elements and messages, and for subtitles. (Additional aspects of menu and console fonts are not covered here.)

While it is possible to design a TDM bitmap font from scratch, historically a number of such fonts were built starting from a TrueType font. That outline font was not read into the engine directly. Instead, it was rendered externally into a set of bitmaps by a conversion tool, and stored as a DAT metrics file plus TGA (really, DDS) bitmap image files. Minor or major post-conversion adjustments were common. This process is described further in Font Conversion & Repair.

Once deployed, font files are read by the engine at startup. In response to any specific GUI request, the engine applies the required character selection, scaling, transformation, and on-screen layout. While bitmap fonts are perhaps not optimal for rendering quality compared to outline fonts, this approach likely had some advantages:

- In early Doom days, it no doubt avoided the performance cost of rendering from font outlines.

- It avoided dependency upon particular fonts pre-existing on the user’s system.

- It did not force use of TrueType; fonts could be created by other means.

- Even if started by a TrueType conversion, font characters could be made accessible for further adjustment with text and bitmap editors.

- It exposed the TGA/DDS to character customization, including support for a wider set of European and Russian languages. (See TDM-specific codepoint mappings I18N - Charset.)

Be aware that the idTech4 system doesn’t support kerning (i.e., adjustment of the spacing between any two characters to accommodate their shape particularities).

TDM Font Metrics

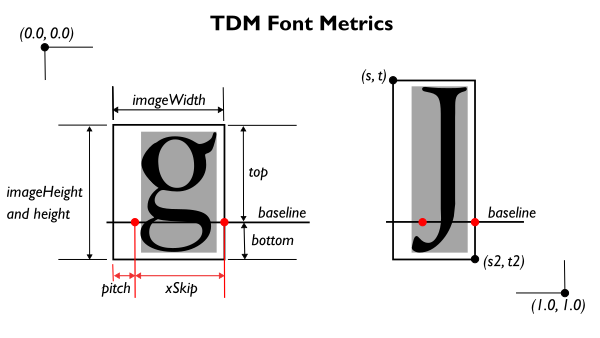

Figure 1. An abstract view of two typical character glyphs, like those found in any of a font's DDS files.

To understand the Doom3/idTech4/TDM font metrics, let's start with a simplified view into a DDS bitmap [Figure 1], which is always 256 x 256 pixels in size and holds multiple character glyphs. With the exception of "baseline", all the named items shown are fields of each character's DAT entry. Most fields (those shown around the "g" image) are distances measured in integer pixels, typically positive. Corner coordinates (shown around the "J" image) are reported in units of 1/256, as decimal values with 6-digit precision.

In the figure, the black-outlined box represents a glyph's defined bitmap, while the gray zone represents the tight bounding rectangle around the glyph, covering all its pixels with non-zero alpha. In this example, both glyphs show a small white padding (e.g., 1 pixel) on three sides, with a somewhat larger padding on the left, which is not uncommon.

Of particular importance are the two red dots on the baseline, which control where the character is ultimately laid out on a line. The left dot, the "origin", is defined by a positive "pitch" distance (more aptly called xOffset in some tools). For most characters, there is a gap between the origin and the gray bounding box (shown here as 1 pixel for "g"). If a descender (in the "bottom" region) needs to extend under a preceding character, as in the "J" example, pitch can arrange that, as shown.

The right dot, located relative to the origin by a positive "xSkip", determines where the origin of the next character on the line is placed. In this figure, the right dot is aligned with the right side of the defined bitmap, which (given right-side padding) is usually the minimum case, e.g., for a 12pt size, a handwritten font, or another tightly spaced font. And if the font had a tall version of "{", you might want xSkip to be slightly less, to bring the next rendered character within its embrace. Conversely, for larger sizes or loosely spaced fonts, xSkip often places the dot 1, 2, or more pixels beyond the imageWidth boundary.
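The two-dot geometry can be sketched as a tiny layout routine. This is one reading of Figure 1, not the engine's drawing code. It assumes that pitch is measured rightward from the bitmap's left edge to the origin (consistent with the rule, given later, that pitch + xSkip roughly equals imageWidth), that top is measured upward from the baseline to the bitmap's top edge, and that screen y grows downward. It ignores textscale and glyphScale. The Glyph class and layout_line function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Glyph:
    """Hypothetical subset of one DAT entry, in pixels."""
    pitch: int        # assumed: bitmap left edge -> origin (left red dot)
    xSkip: int        # origin -> right red dot (next character's origin)
    top: int          # assumed: baseline -> top edge of the bitmap
    imageWidth: int
    imageHeight: int


def layout_line(glyphs, start_x, baseline_y, scale=1.0):
    """Place glyph bitmaps along one line; screen y grows downward.

    Returns the list of (left, top, width, height) rectangles and the
    origin where a following character would start.
    """
    rects = []
    origin_x = float(start_x)
    for g in glyphs:
        left = origin_x - g.pitch * scale      # bitmap starts pitch px left of the origin
        top = baseline_y - g.top * scale       # bitmap top sits `top` px above the baseline
        rects.append((left, top, g.imageWidth * scale, g.imageHeight * scale))
        origin_x += g.xSkip * scale            # right dot becomes the next origin
    return rects, origin_x


# A "g" with a 2 px pitch, then a "J" whose larger pitch tucks its hook leftward.
g = Glyph(pitch=2, xSkip=10, top=12, imageWidth=11, imageHeight=16)
J = Glyph(pitch=5, xSkip=9, top=20, imageWidth=13, imageHeight=24)
rects, next_origin = layout_line([g, J], start_x=100, baseline_y=50)
print(rects)        # [(98.0, 38.0, 11.0, 16.0), (105.0, 30.0, 13.0, 24.0)]
print(next_origin)  # 119.0
```

Here the "J" bitmap starts at x = 105, left of where the "g" bitmap ends (x = 109), which is how a descender can reach under a preceding character.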

Padding sizes can vary, depending on how the DDS was originally constructed (e.g., with certain options of a particular TrueType conversion tool) and how it was subsequently altered. There may be no padding on the bottom, top, and right side, particularly for a 12pt font, or more padding for a 48pt font. Or the top padding for lower-case characters like "g" may be greatly enlarged to match that of upper-case characters like "J".

In a well-formed DAT file, "height" and "imageHeight" of a given character will always have the same value. (Inside the engine, there are other uses for this data structure, where a difference between imageHeight and height can indicate internal scaling. Similarly, internally, the "pitch" field can take on a different meaning: to indicate an optimized bitmap-transfer width in bytes. Perhaps the name "pitch" makes more sense in that context.)

The DAT File Format

A DAT file is a fixed-size binary file. It begins with 256 blocks of 80 bytes each, one block per glyph, in assumed ascending codepoint order. Each block has this layout:

- int height
- int top
- int bottom
- int pitch
- int xSkip
- int imageWidth
- int imageHeight
- float s
- float t
- float s2
- float t2
- int glyph // placeholder, simply zero
- char[32] shaderName // DDS filename for this specific glyph; see description below

At the end of the file, there is:

- float glyphScale
- char[64] name // this file's TDM path and name, without the DAT extension, e.g., "fonts/fontImage_24"

The ints here are each 4 bytes in size (i.e., 32 bits, not 64), and the floats are also 4 bytes, as is normal. The whole file is therefore 256 x 80 + 68 = 20548 bytes. (When ultimately read into the engine, the integers end up as floats, in a more general data structure, font_info_t.)
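The layout above maps directly onto Python's standard struct module. This is a minimal sketch, assuming little-endian byte order (as written on x86) and no padding between fields; read_dat and write_dat are hypothetical helper names, and the char arrays are assumed to be NUL-padded Latin-1 text.

```python
import struct

# Assumed: little-endian, no padding between fields ("<" disables alignment).
GLYPH = struct.Struct("<7i4fi32s")   # 7 ints, s t s2 t2, glyph, shaderName = 80 bytes
TRAILER = struct.Struct("<f64s")     # glyphScale, name = 68 bytes
NUM_GLYPHS = 256
DAT_SIZE = NUM_GLYPHS * GLYPH.size + TRAILER.size   # 20548

FIELDS = ("height", "top", "bottom", "pitch", "xSkip", "imageWidth", "imageHeight",
          "s", "t", "s2", "t2", "glyph", "shaderName")


def _cstr(raw: bytes) -> str:
    """Decode a fixed-size, NUL-padded char array (Latin-1 assumed)."""
    return raw.split(b"\0", 1)[0].decode("latin-1")


def read_dat(data: bytes):
    """Parse DAT bytes into (list of 256 glyph dicts, glyphScale, name)."""
    if len(data) != DAT_SIZE:
        raise ValueError(f"expected {DAT_SIZE} bytes, got {len(data)}")
    glyphs = []
    for i in range(NUM_GLYPHS):
        values = list(GLYPH.unpack_from(data, i * GLYPH.size))
        values[-1] = _cstr(values[-1])          # shaderName
        glyphs.append(dict(zip(FIELDS, values)))
    glyph_scale, raw_name = TRAILER.unpack_from(data, NUM_GLYPHS * GLYPH.size)
    return glyphs, glyph_scale, _cstr(raw_name)


def write_dat(glyphs, glyph_scale: float, name: str) -> bytes:
    """Inverse of read_dat; struct pads (or truncates) the char arrays with NULs."""
    out = bytearray()
    for g in glyphs:
        numbers = [g[f] for f in FIELDS[:-1]]   # every field except shaderName
        out += GLYPH.pack(*numbers, g["shaderName"].encode("latin-1"))
    out += TRAILER.pack(glyph_scale, name.encode("latin-1"))
    return bytes(out)
```

Reading a real file is then read_dat(open(path, "rb").read()), after which glyphs[0x41] holds the entry for "A".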

Understanding the S, T, S2, and T2 Values

In the DAT file, a given character's s, s2, t, and t2 are all float values in the inclusive range [0.000000 to 1.000000]. Values outside this range can cause anomalous rendering. Given that there is only one digit to the left of the decimal point, float precision implies that the 6 digits after the decimal point should have meaningful content (not just zeros or junk).

Best practice is to set these values to n / 256.0, where n is an integer in the inclusive range [0 to 256]. This is what ExportFontToDoom3 does when first generating a DAT file; Font Patcher and the REF file format of Refont also preserve this.

But the FNT file format of Q3Font allows arbitrary values, so it's the Wild West, and this is regrettably reflected in some actual DAT files. Fortunately, the engine tolerates squishy values and provides its own rounding. Nevertheless, when changing these values, it is recommended that you provide n' / 256.0 at full precision, to minimize the risk of rounding errors.

s2 >= s and t2 >= t // all are zero if codepoint is skipped
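The n / 256.0 rule is easy to enforce mechanically. A minimal sketch, with to_st and snap_st as hypothetical names: snapping rounds an arbitrary value to the nearest grid line and clamps it to [0, 256] before dividing.

```python
def to_st(n: int) -> float:
    """Best-practice coordinate for grid line n (0..256): exactly n / 256.0."""
    if not 0 <= n <= 256:
        raise ValueError(f"grid line out of range: {n}")
    return n / 256.0


def snap_st(value: float) -> float:
    """Snap an arbitrary s/t/s2/t2 value to the nearest n / 256.0 in [0, 1]."""
    n = round(value * 256)        # nearest grid line (Python rounds exact ties to even)
    n = min(max(n, 0), 256)       # clamp out-of-range values into [0, 256]
    return n / 256.0
```

Six printed decimals are enough to recover n exactly: the printing error (at most 5e-7) times 256 stays far below 0.5, so snap_st of the 6-decimal text gives back n / 256.0.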

It is perhaps surprising that 256 instead of 255 is used as the divisor. There are two equivalent ways to think about this:

The bitmap, of size 256 x 256, can be visualized as a grid of square pixels. Number the grid lines (not the pixels) 0 to 256. Then (s,t) and (s2,t2), multiplied by 256, are the grid points bounding the sub-bitmap of interest.

Alternatively, given a pixel array "pix[nx,ny]", and with s, t, s2, and t2 multiplied by 256 to become pixel indices, pix[s,t] is the upper-left pixel to include in the desired sub-bitmap, while pix[s2,t2] is the lower-right pixel to exclude. In this formulation, (s2, t2) with floating representation (1.0, 1.0) becomes pix[256,256], in a "ghost" column and row outside the actual array.
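The second formulation is exactly a half-open range, which is how slicing already works in many languages. A minimal sketch with hypothetical names; note that this one stores the bitmap as a list of rows, pix[y][x], rather than the x-first pix[nx,ny] used above.

```python
def st_to_pixel_rect(s, t, s2, t2, size=256):
    """Map DAT coordinates to a half-open pixel rectangle (x0, y0, x1, y1).

    Columns x0..x1-1 and rows y0..y1-1 belong to the glyph; x1 and y1 are the
    first excluded column/row, which may be the "ghost" index 256.
    """
    return (round(s * size), round(t * size), round(s2 * size), round(t2 * size))


def crop(pix, rect):
    """Cut the sub-bitmap out of pix, stored here as rows: pix[y][x]."""
    x0, y0, x1, y1 = rect
    return [row[x0:x1] for row in pix[y0:y1]]
```

Because the excluded edge can be 256, a glyph touching the right or bottom border of the DDS needs no special case, and x1 - x0 is the same difference used in the imageWidth formula under Other Expected DAT Values.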

Other Expected DAT Values

Here is what you should expect in a well-formed DAT file.

The "glyphScale" at the end has one of three values, which presumably ties the font data into a GUI’s "textscale" value:

- For 48 pt: 1.000000

- For 24 pt: 2.000000

- For 12 pt: 4.000000
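The three values follow the pattern glyphScale = 48 / point size. A small sketch (hypothetical helper names) that computes it and, in reverse, flags a DAT whose glyphScale is not one of the three expected values:

```python
import math

EXPECTED_POINT_SIZES = (12, 24, 48)


def glyph_scale_for(point_size: int) -> float:
    """glyphScale for a TDM font size, per the list above (48 / point size)."""
    if point_size not in EXPECTED_POINT_SIZES:
        raise ValueError(f"unexpected point size: {point_size}")
    return 48.0 / point_size


def point_size_for(glyph_scale: float) -> int:
    """Inverse lookup; raises if glyphScale is not 1.0, 2.0 or 4.0."""
    for pt in EXPECTED_POINT_SIZES:
        if math.isclose(glyph_scale, 48.0 / pt):
            return pt
    raise ValueError(f"unexpected glyphScale: {glyph_scale}")
```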

For Each Glyph

Integer values are generally in the inclusive range [0 to 256]. There are occasional exceptions, e.g., a negative value of "top" for an underscore that lies entirely below the baseline.

The meaning and best practices for the float values s, s2, t, and t2 were described in the previous section.

s2 >= s and t2 >= t // all are zero if codepoint is skipped

A codepoint for which no unique glyph is provided is typically directed to a space, a hollow box glyph, or a zero-dimension image, or else to a substitute glyph; for instance, an unaccented ASCII character may stand in for a missing accented European character.

Other expectations:

imageHeight = height

In most cases (but see per-character scaling below), where "round" means rounding to the nearest integer:

imageWidth = round(s2 * 256) - round(s * 256)

imageHeight = round(t2 * 256) - round(t * 256)

Ideally, this would also be a requirement:

height = top + bottom

However, in practice, bottom is not used within the engine and, being informational only, is often incorrect in the DAT file. Instead, the engine relies on:

baseline = height - top

Also, as discussed above:

pitch + xSkip approximately equals or slightly exceeds imageWidth

Finally, "glyph" is left zeroed; inside the engine, it becomes a pointer to the in-memory version of the data.

"shaderName" is a TDM-style relative path to the specific DDS file containing the glyph. It always begins with "fonts/" and gives the extension as ".tga", even for DDS. Example shaderName:

fonts/Stone_0_24.tga

(When the engine reads this, internally the "fonts/" prefix is stripped off.)
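The expectations in this section can be collected into a checker. This sketch takes one glyph entry as a dict keyed by the DAT field names (an assumed representation); check_glyph and its 1-pixel slack on pitch + xSkip are hypothetical choices, not engine rules. It skips the top + bottom = height test, since bottom is informational, and its imageWidth/imageHeight tests will flag glyphs that use per-character scaling.

```python
def check_glyph(g: dict, slack: int = 1) -> list:
    """Return warnings for one DAT entry; an empty list means it looks well-formed."""
    warnings = []
    for k in ("s", "t", "s2", "t2"):
        if not 0.0 <= g[k] <= 1.0:
            warnings.append(f"{k}={g[k]} outside [0, 1]")
    if g["s2"] < g["s"] or g["t2"] < g["t"]:
        warnings.append("s2 < s or t2 < t")
    if g["imageHeight"] != g["height"]:
        warnings.append("imageHeight != height")
    # These two may legitimately fail for per-character scaled glyphs.
    if g["imageWidth"] != round(g["s2"] * 256) - round(g["s"] * 256):
        warnings.append("imageWidth disagrees with s, s2")
    if g["imageHeight"] != round(g["t2"] * 256) - round(g["t"] * 256):
        warnings.append("imageHeight disagrees with t, t2")
    # "Approximately equals or slightly exceeds": tolerate a small (assumed) shortfall.
    if g["pitch"] + g["xSkip"] < g["imageWidth"] - slack:
        warnings.append("pitch + xSkip well short of imageWidth")
    if g["glyph"] != 0:
        warnings.append("glyph placeholder is not zero")
    name = g["shaderName"]
    if not (name.startswith("fonts/") and name.endswith(".tga")):
        warnings.append(f"unexpected shaderName {name!r}")
    return warnings
```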

Per-Character Font Scaling

Overall scaling of a font is left to a .gui’s "textscale" at runtime. However, it is possible to indicate in the DAT that particular characters should first have additional scaling applied. That is, instead of resizing a specific glyph itself in the bitmap DDS file, the engine is told to do it in memory.

There are only two TDM fonts that have such scaling: mason and mason_glow, used in the main menu. For these fonts, upper-case characters were scaled by a requested 1.20 in both directions, using Font Patcher. The reason given was "to match the original more closely", presumably meaning the relative sizes of the upper- and lower-case characters in the Doom menu font.

To achieve this, the "english/mason_48.txt" command script for Font_Patcher.pl contains a series of commands like:

scale 0x41 1.20 1.20 // where 0x41 is A

This scaling was applied to the DAT metrics of all 74 upper-case English/Latin characters. (Aside: other, unrelated DAT changes, relative to the DAT for the original "font_source" bitmaps, were also applied in this script, aligned with the revised bitmaps in what was first called "masonalternate_48.xcf".)

Font Patcher's "scale" command does the following for the character in question (where sx and sy are the command’s scaling parameters):

height *= sy; imageHeight *= sy; imageWidth *= sx; pitch *= sx; xSkip *= sx;

Note that this does NOT change top or bottom, nor s, s2, t, or t2.

Finally, it is important to point out that each of these scaling calculations usually yields a non-integer value, which is then converted to an integer. (With Font Patcher, this quantization seems to be done by Perl's "unpack" function; whether it truncates or rounds may be system dependent.)
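The effect of one scale command on a glyph's metrics can be sketched as follows. apply_scale is a hypothetical helper, not Font Patcher's code. Because the exact integer conversion Font Patcher performs is uncertain, the conversion is a parameter: the default is truncation toward zero (an assumption), with round as the alternative.

```python
import math


def apply_scale(glyph: dict, sx: float, sy: float, to_int=math.trunc) -> dict:
    """Return a copy of one DAT entry with a Font Patcher-style 'scale' applied.

    Only height, imageHeight (by sy) and imageWidth, pitch, xSkip (by sx) change;
    top, bottom, s, t, s2 and t2 are copied unchanged.
    """
    out = dict(glyph)
    for field in ("height", "imageHeight"):
        out[field] = to_int(glyph[field] * sy)
    for field in ("imageWidth", "pitch", "xSkip"):
        out[field] = to_int(glyph[field] * sx)
    return out


A = {"height": 30, "top": 24, "bottom": 6, "pitch": 1, "xSkip": 20,
     "imageWidth": 19, "imageHeight": 30, "s": 32 / 256, "t": 64 / 256,
     "s2": 51 / 256, "t2": 94 / 256}
print(apply_scale(A, 1.20, 1.20)["imageWidth"])                # 22 (about 22.8, truncated)
print(apply_scale(A, 1.20, 1.20, to_int=round)["imageWidth"])  # 23 (about 22.8, rounded)
```

After scaling, imageWidth (22 or 23) no longer matches the 19 pixels spanned by s and s2, which is why the imageWidth and imageHeight formulas above hold only "in most cases".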

More about the DDS File Format

See

- Font Conversion & Repair for how to view and adjust DAT values, as well as important constraints of changing metrics on long-deployed fonts.

- Font Files for DDS file naming, directory location, scaling, and usage by TDM.

- Font Bitmaps in DDS Files about color and alpha representations, with examples.